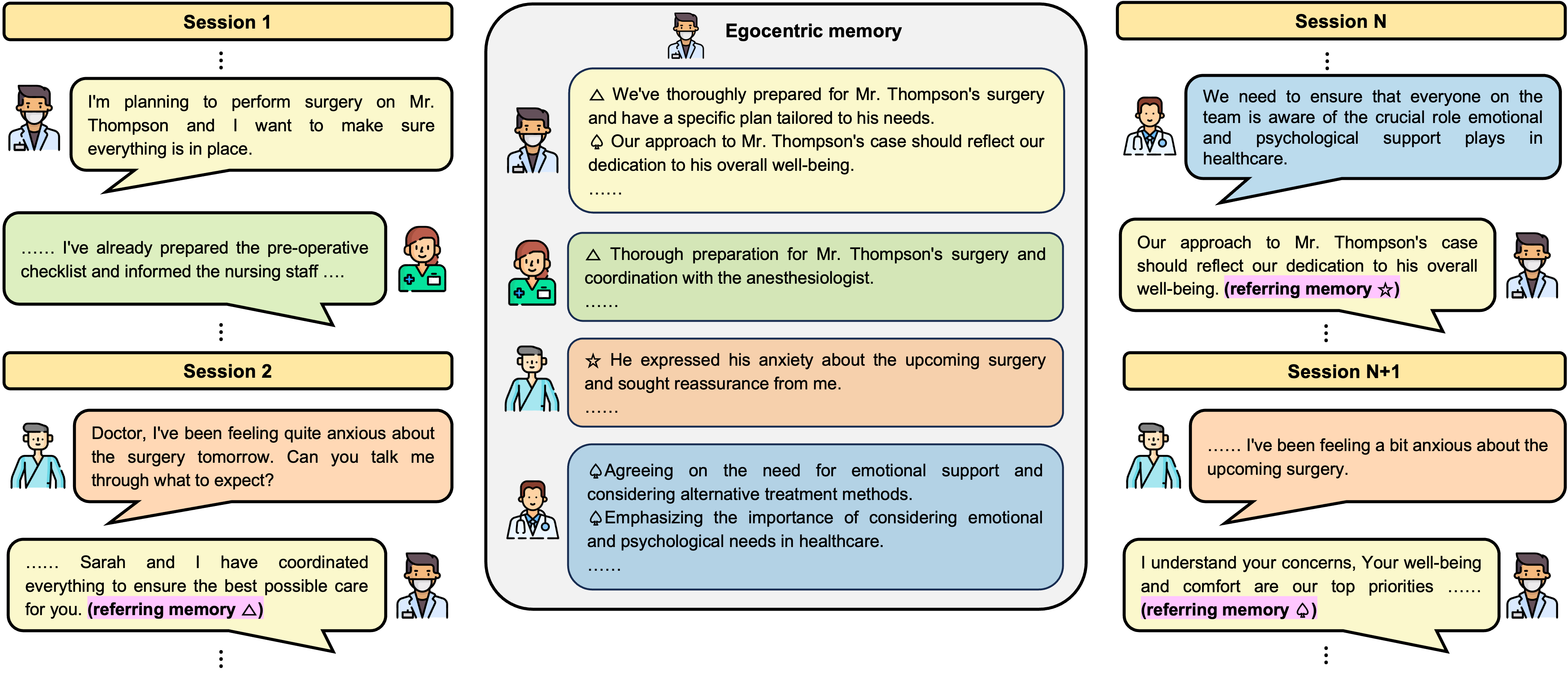

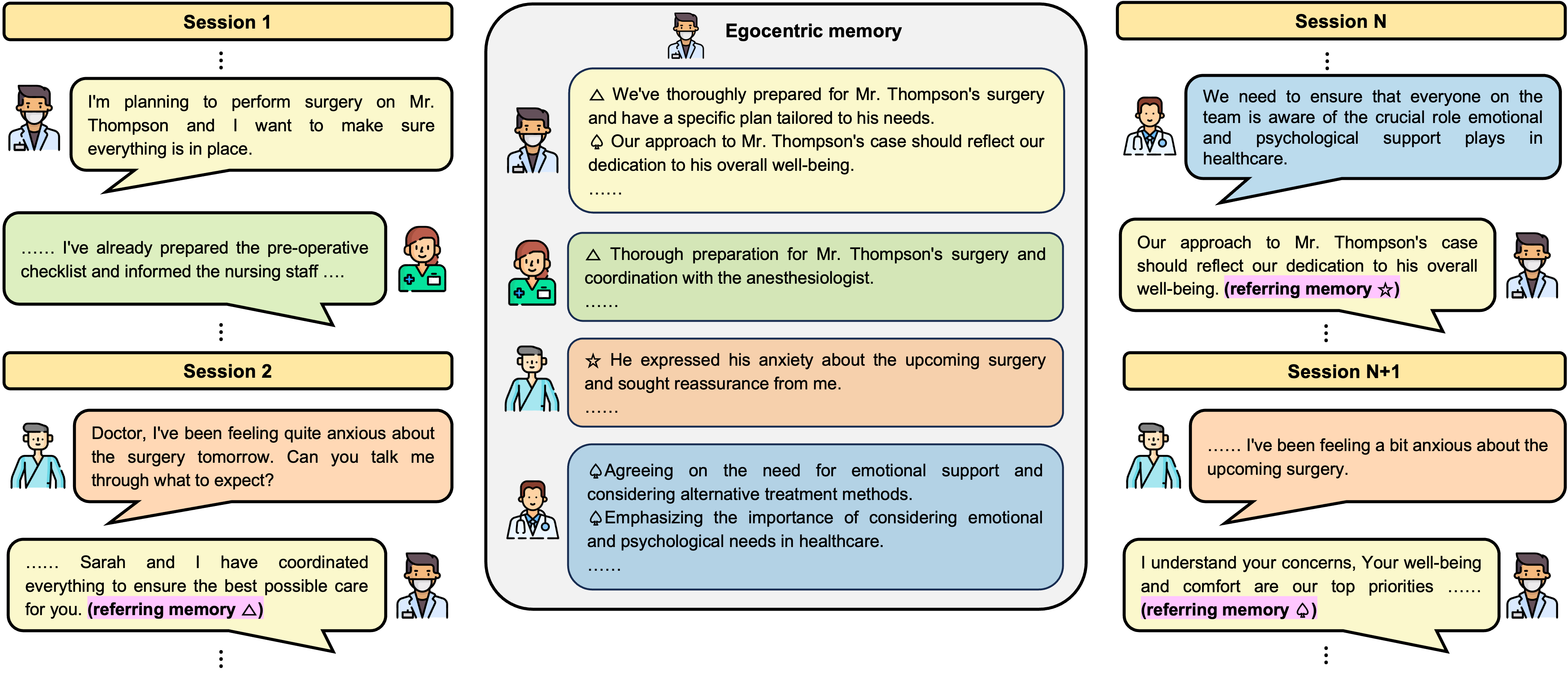

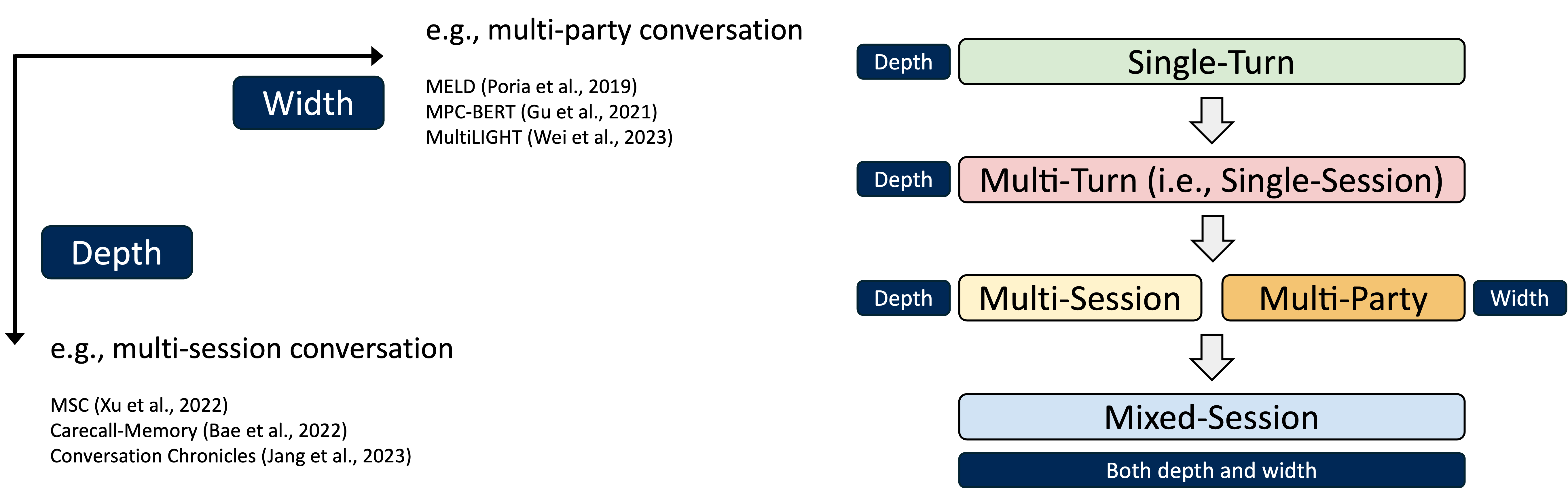

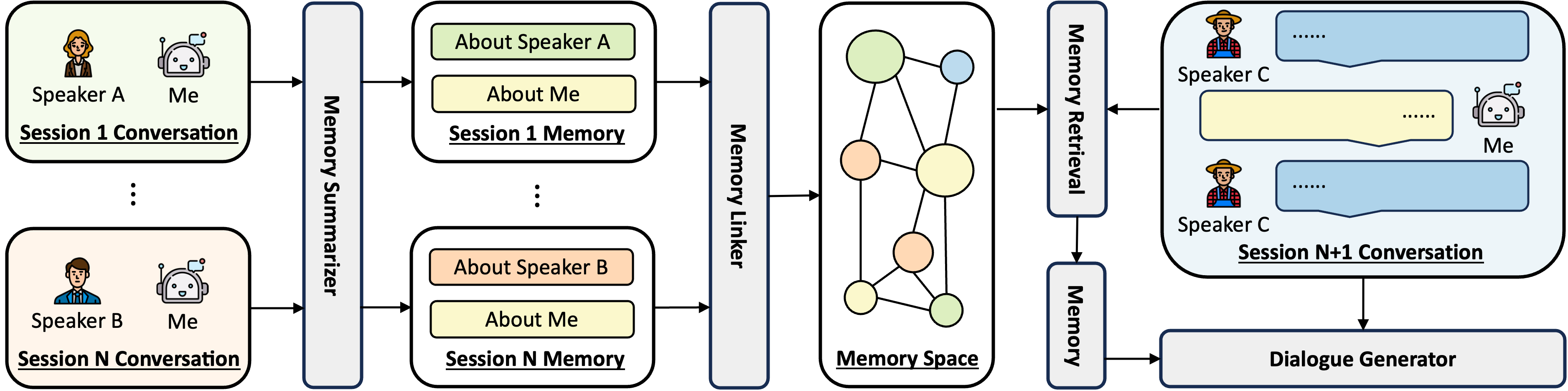

Recently introduced dialogue systems have demonstrated high usability, yet they still fall short of reflecting real-world conversation scenarios. Current dialogue systems cannot replicate dynamic, continuous, long-term interactions involving multiple partners. This shortfall arises because few efforts have accounted for both aspects of real-world dialogue together: deeply layered interactions over long-term dialogue and widely expanded conversation networks involving multiple participants. To incorporate both aspects, we introduce Mixed-Session Conversation, a dialogue system designed to construct conversations with various partners in a multi-session dialogue setup. We propose a new dataset called MiSC to implement this system. Each dialogue episode in MiSC consists of 6 consecutive sessions, with four speakers (one main speaker and three partners) appearing in each episode. We also propose a new dialogue model with a novel memory management mechanism, called Egocentric Memory Enhanced Mixed-Session Conversation Agent (EMMA). EMMA collects and retains memories from the main speaker's perspective during conversations with partners, enabling seamless continuity in subsequent interactions. Extensive human evaluations validate that the dialogues in MiSC demonstrate a seamless conversational flow, even when the conversation partner changes in each session. Evaluations also show that EMMA trained with MiSC maintains high memorability without contradiction throughout the entire conversation.
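The idea of an egocentric memory can be illustrated with a small sketch. This is not EMMA's actual implementation: the class names `Memory` and `EgocentricMemory`, the word-overlap retrieval, and the example facts are all assumptions made for illustration. The sketch only shows the structural point stated above, namely that a single memory pool belongs to the main speaker and carries over when the partner changes between sessions.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Memory:
    """One remembered fact, stored from the main speaker's point of view."""
    session: int   # session index in which the fact was learned
    partner: str   # partner the main speaker was talking to
    text: str      # the remembered fact (invented example data)


@dataclass
class EgocentricMemory:
    """Hypothetical store: one memory pool owned by the main speaker."""
    main_speaker: str
    entries: list[Memory] = field(default_factory=list)

    def add(self, session: int, partner: str, text: str) -> None:
        self.entries.append(Memory(session, partner, text))

    def recall(self, query: str, top_k: int = 2) -> list[Memory]:
        """Rank memories by word overlap with the query.

        Word overlap is a stand-in for a learned retriever (an assumption).
        """
        query_words = set(query.lower().split())
        scored = [
            (len(query_words & set(m.text.lower().split())), m)
            for m in self.entries
        ]
        # Keep only memories that share a word with the query, best first.
        hits = [pair for pair in scored if pair[0] > 0]
        hits.sort(key=lambda pair: pair[0], reverse=True)
        return [m for _, m in hits[:top_k]]


# Session 1: Alice (main speaker) talks with Bob.
memory = EgocentricMemory(main_speaker="Alice")
memory.add(1, "Bob", "Bob is nervous about the math exam")
memory.add(1, "Bob", "Bob enjoys playing soccer after school")

# Session 2: the partner changes to Henry, but the same pool is queried.
recalled = memory.recall("how is Bob doing with the math exam")
print(recalled[0].text)
```

Because the pool is keyed to the main speaker rather than to a speaker pair, a fact learned while talking with one partner stays available in a later session with a different partner.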

| Aspect | Description |
| --- | --- |
| Width | Expanding the number of participants involved (e.g., multi-party conversations) |
| Depth | Sustaining conversation continuity over time (e.g., multi-session conversations) |

[Figure: example dialogue, content elided. Speakers: Alice (Bob's teacher, Main Speaker) and Bob (Student), followed by Alice (Bob's teacher, Main Speaker) and Henry (Bob's father).]

[Figure: model comparison, content elided. Models: MSC 2.7B (Xu et al., 2022), ReBot (Jang et al., 2023), and EMMA (Ours). Current session speakers: Sophia (Leo's teacher, Main Speaker) and Ava (Leo's mom).]

@article{jang2024mixed,
  title={Mixed-Session Conversation with Egocentric Memory},
  author={Jang, Jihyoung and Kim, Taeyoung and Kim, Hyounghun},
  journal={arXiv preprint arXiv:2410.02503},
  year={2024}
}